Trustworthy Machine Learning in Biomedical Research

As machine learning becomes increasingly central to biomedical discovery and clinical decision-making, ensuring the reliability, fairness, and interpretability of these models is critical. In our lab, we are committed to developing and applying machine learning methods that are not only accurate but also trustworthy, meaning they are robust to noise, generalizable across datasets, transparent in their decision-making, and aligned with ethical and clinical standards.

Our work spans multiple aspects of trustworthy ML, including uncertainty quantification, model calibration, interpretability, fairness in predictive models, and robustness to distributional shifts. These components are especially important in healthcare, where decisions influenced by models can have direct consequences for patients.

In the context of multi-omics data, single-cell analysis, and quantitative imaging, we embed trustworthiness principles throughout the model development pipeline, from data preprocessing and integration to prediction and interpretation. This ensures that our computational outputs can be confidently used to guide biological insight and translational applications.

Model Calibration Under Distribution Shift

Current-generation neural networks exhibit systematic underconfidence rather than the overconfidence reported in earlier models, and demonstrate improved calibration robustness under distribution shift. However, post-hoc calibration methods become less effective or even detrimental under severe shifts. Our analysis across ImageNet and biomedical datasets reveals that calibration insights from web-scraped benchmarks have limited transferability to specialized domains, where convolutional architectures consistently outperform transformers regardless of model generation. This work challenges established calibration paradigms and emphasizes the need for domain-specific architectural evaluation beyond standard benchmarks.

Uncertainty Quantification for Classification and Applications

Reliably estimating the uncertainty of a prediction throughout the model lifecycle is crucial in many safety-critical applications. Since ML-based decision models are increasingly deployed in dynamic environments, understanding when and why a model might fail becomes as important as achieving accurate predictive performance. In our group, we focus on developing theoretically sounded methods for uncertainty quantification that remain robust across different applications, enabling more trustworthy and transparent AI systems.

Uncertainty Estimates of Predictions via a General Bias-Variance Decomposition (AISTATS 2023)

Proper scoring rules (e.g., Brier score or negative log-likelihood) are commonly used as loss functions in machine learning, as they are designed to assign optimal predictions to the target distribution. However, it remains unclear how to decompose these scores in a way that a component capturing the model’s predictive uncertainty arises. To address this, we derive a general bias-variance decomposition for proper scoring rules, where the Bregman Information (BI) naturally emerges as the variance term. This new theoretical insight has practical implications for classification tasks: since the decomposition applies to the cross-entropy loss, it allows us to quantify predictive uncertainty directly in the logit space (the standard output of neural networks) without requiring a normalisation step. Extensive empirical results demonstrate the effectiveness and robustness of this method, particularly in out-of-distribution settings. [pdf, repo]

How to Leverage Predictive Uncertainty Estimates for Reducing Catastrophic Forgetting in Online Continual Learning (TMLR 2025)

In many real-world scenarios, we want models to continuously learn new information without forgetting what they already know. In memory-based online continual learning, a key challenge is managing a limited memory buffer to mitigate catastrophic forgetting (CF) — but what is the best strategy for selecting samples to store in the memory? Under an uncertainty lens, we investigate what characteristics make samples effective in alleviating CF. Starting from the examination of the properties and behaviours of popular uncertainty estimates, we identify that they mostly capture the irreducible aleatoric uncertainty and hypothesise that a better strategy should focus on the epistemic uncertainty instead. To this end, we propose using Bregman Information – derived from our general bias-variance decomposition of strictly proper scores – as an effective estimator of epistemic uncertainty, leading to improved memory population strategy and reduced forgetting. [pdf, repo]

Federated Continual Learning Goes Online: Uncertainty-Aware Memory Management for Vision Tasks and Beyond (ICLR 2025)

Federated Continual Learning (FCL) is a powerful paradigm that combines the privacy-preserving benefits of Federated Learning (FL) with the ability to learn sequentially over time, as in Continual Learning (CL). However, catastrophic forgetting still remains a major challenge. Most existing FCL methods rely on generative models, assuming an offline setting where all task data are available beforehand. But in real-world applications, data often arrives sequentially in small chunks — a challenge that remains largely unaddressed. To address this, we introduce a novel framework for online federated continual learning. To address scenarios where storing the full dataset locally is impractical, we propose an effective memory-based baseline that integrates uncertainty-aware updates — based on Bregman Information — with random replay to reduce catastrophic forgetting. Unlike generative approaches, our uncertainty-based solution is simple to implement and adaptable across different data modalities. [pdf, repo]

Uncertainty Quantification for Generative AI

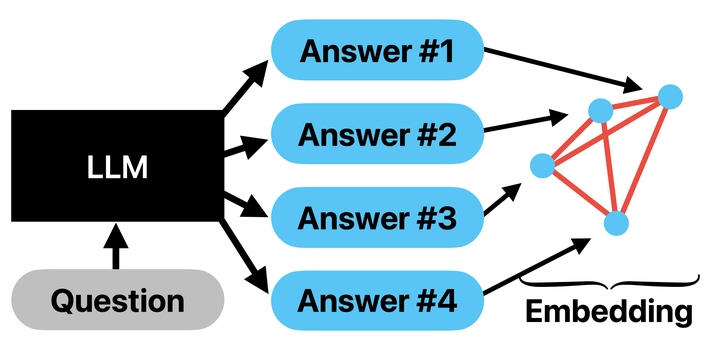

Generative AI models in general and large language models in particular have emerged as a disruptive technology that has been rapidly democratized. Their use in critical domains such as medicine, scientific research, and politics has raised serious concerns about reliability. Consequently, robust estimation of their uncertainty is essential to build trust and to prevent the potentially severe consequences of failures. We develop methods to quantify and calibrate their uncertainty across different modalities, while accounting for the specific characteristics of each modality.

A Bias-Variance-Covariance Decomposition of Kernel Scores for Generative Models (ICML 2024)

This paper tackles a core gap in generative AI: there’s no unified, theory-grounded way to assess generalization and uncertainty across modalities or closed-source models. We introduce the first bias–variance–covariance decomposition for kernel scores, yielding kernel-based measures that can be estimated directly from generated samples, without access to the underlying model. Because kernels work from samples alone and are computed based on vector representations of these samples, the framework applies uniformly to images, audio, and language. In experiments, the approach explains generalization behavior (including mode collapse patterns) and delivers stronger uncertainty signals, even for closed-source LLMs.