Probabilistic latent variable models

Latent variable models (LVMs) are statistical tools for inferring the unobserved (hidden) state of a complex system, such as a biological one, from observable data that are often high-dimensional. To this end, a high-dimensional dataset of correlated observations is reduced to a low-dimensional set of uncorrelated, interpretable latent variables. Probabilistic approaches offer a principled way to disentangle distinct sources of variation and to model dependencies between features, as well as between samples, explicitly.

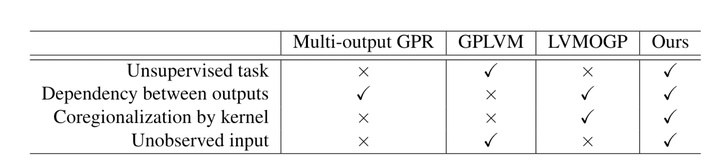

Accounting for dependencies between genes in LVMs

- Standard latent variable models only model dependencies between samples

- Can we make dependencies between features (genes) explicit?

- Use framework of Gaussian Process Latent Variable Models (GP-LVM)

- Probabilistic kernel PCA via GP regression with unobserved input

- Introduce kernel to model covariance between genes

- Learn latent variables for genes and for samples and connect them via a Kronecker product

- Apply to matrix completion tasks

Reference: Yang & Buettner, UAI 2021 (in revision)
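
As a rough illustration of the Kronecker construction in the list above, the following NumPy sketch builds one covariance over samples and one over genes from latent coordinates, combines them with a Kronecker product, and uses the standard GP conditional mean to fill in held-out matrix entries. It is not the implementation from the referenced work: the RBF kernel, the noise level, the dimensions and all names (`rbf_kernel`, `X_samples`, `X_genes`, `mask`) are assumptions, and the latent variables are drawn at random here instead of being learned.

```python
import numpy as np

def rbf_kernel(X, lengthscale=1.0, variance=1.0):
    """RBF covariance between the rows of X (kernel choice is an assumption)."""
    sq_norms = np.sum(X**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    return variance * np.exp(-0.5 * np.maximum(sq_dists, 0.0) / lengthscale**2)

rng = np.random.default_rng(0)
n_samples, n_genes, q = 5, 4, 2                 # hypothetical sizes

# Latent variables; in a GP-LVM these would be learned, here they are random.
X_samples = rng.normal(size=(n_samples, q))
X_genes = rng.normal(size=(n_genes, q))

K_samples = rbf_kernel(X_samples)               # dependencies between samples
K_genes = rbf_kernel(X_genes)                   # dependencies between genes

# Covariance of vec(Y), with Y of shape (n_samples, n_genes) stacked by column.
noise = 0.1
K_signal = np.kron(K_genes, K_samples)
K_full = K_signal + noise * np.eye(n_samples * n_genes)

# Draw one synthetic data matrix from the model.
y = np.linalg.cholesky(K_full) @ rng.normal(size=n_samples * n_genes)
Y = y.reshape(n_genes, n_samples).T             # undo the column stacking

# Matrix completion: hide three entries and predict them from the rest.
mask = np.ones(n_samples * n_genes, dtype=bool)  # True = observed
mask[[3, 7, 12]] = False
K_oo = K_full[np.ix_(mask, mask)]
K_mo = K_signal[np.ix_(~mask, mask)]
y_missing_mean = K_mo @ np.linalg.solve(K_oo, y[mask])
```

The dense Kronecker product is only practical for small matrices; for larger ones, the structure is usually exploited through the eigendecompositions of the two factors instead of forming `K_full` explicitly.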

Hierarchical autoencoders for domain generalisation

- Learn a VAE to disentangle domain-specific information from class-specific information and residual variance

- Place Dirichlet prior on domain representation

- Learn “topics” that describe domain structure in unsupervised manner

- Interpretable model for unsupervised domain generalisation

Reference: Sun & Buettner, ICLR Workshop on robustML, 2021
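
To make the latent structure described above more concrete, the sketch below samples from a prior with three blocks: a domain representation on the simplex with a Dirichlet prior (the "topic" proportions), a class-specific block and a residual block. This is only an illustration of the prior side, not the model from the referenced work: the block sizes, the concentration `alpha`, the standard normal priors on the class and residual blocks, and the function name `sample_latents` are all assumptions, and no encoder or decoder is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
n_topics, d_class, d_resid = 3, 4, 4            # hypothetical block sizes

def sample_latents(n, alpha=0.5):
    """Draw n latent codes from the assumed three-block prior."""
    # Domain block: topic proportions, non-negative and summing to one.
    z_domain = rng.dirichlet(alpha * np.ones(n_topics), size=n)
    # Class-specific and residual blocks: standard normal (an assumption).
    z_class = rng.normal(size=(n, d_class))
    z_resid = rng.normal(size=(n, d_resid))
    return z_domain, z_class, z_resid

z_domain, z_class, z_resid = sample_latents(6)

# A decoder would take the concatenation of the three blocks as input.
z = np.concatenate([z_domain, z_class, z_resid], axis=1)
```

A concentration below one, as assumed here, favours sparse topic proportions, so each sample tends to be dominated by a few topics, which is one reason a Dirichlet prior lends itself to an interpretable domain representation.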