Uncertainty-aware deep learning in the real world

Due to their high predictive power, deep neural networks are increasingly being used as part of decision-making systems in real-world applications. However, such systems require not only high accuracy, but also reliable and calibrated uncertainty estimates. Especially in safety-critical applications in medicine, where average-case performance is insufficient, practitioners need access to reliable predictive uncertainty during the entire lifecycle of the model. This means confidence scores (or predictive uncertainty) should be meaningful not only for in-domain predictions, but also under gradual domain drift, where the distribution of the input samples gradually changes from in-domain to truly out-of-distribution (OOD). In healthcare, common examples of such domain shift scenarios are a patient demographic that changes over time or new hospitals in which a model is to be deployed.

Towards Trustworthy Predictions from Deep Neural Networks with Fast Adversarial Calibration

Here, we propose an efficient yet general modelling approach for obtaining well-calibrated, trustworthy probabilities for samples obtained after a domain shift. We introduce a new training strategy combining an entropy-encouraging loss term with an adversarial calibration loss term and demonstrate that this results in well-calibrated and technically trustworthy predictions for a wide range of domain drifts. We comprehensively evaluate previously proposed approaches across different data modalities, a large range of data sets (including sequence data), network architectures and perturbation strategies. We observe that our modelling approach substantially outperforms existing state-of-the-art approaches, yielding well-calibrated predictions under domain drift.

Reference: Tomani & Buettner, AAAI 2021
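
To make the idea of an entropy-encouraging loss term more concrete, the following is a minimal NumPy sketch, not the loss used in the paper. It assumes one simple form: the usual cross-entropy minus a weighted predictive entropy, so that spreading probability mass is rewarded. The function names and the weight `lam` are hypothetical, and the adversarial calibration term is omitted entirely.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def predictive_entropy(probs, eps=1e-12):
    """Shannon entropy of each predicted class distribution."""
    return -(probs * np.log(probs + eps)).sum(axis=1)

def entropy_regularized_loss(logits, labels, lam=0.1, eps=1e-12):
    """Cross-entropy minus lam * entropy (illustrative assumption only).

    A larger lam pushes the model towards less peaked predictions.
    """
    probs = softmax(logits)
    nll = -np.log(probs[np.arange(len(labels)), labels] + eps)
    return float((nll - lam * predictive_entropy(probs)).mean())

# Toy usage: one confident and one uncertain prediction for 3 classes.
toy_logits = np.array([[6.0, 0.0, 0.0], [0.5, 0.4, 0.3]])
toy_labels = np.array([0, 1])
plain_loss = entropy_regularized_loss(toy_logits, toy_labels, lam=0.0)
regularized_loss = entropy_regularized_loss(toy_logits, toy_labels, lam=0.5)
```

Because entropy is non-negative, the regularized value is never larger than the plain cross-entropy for the same predictions; how strongly to weight the term would be a tuning decision.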

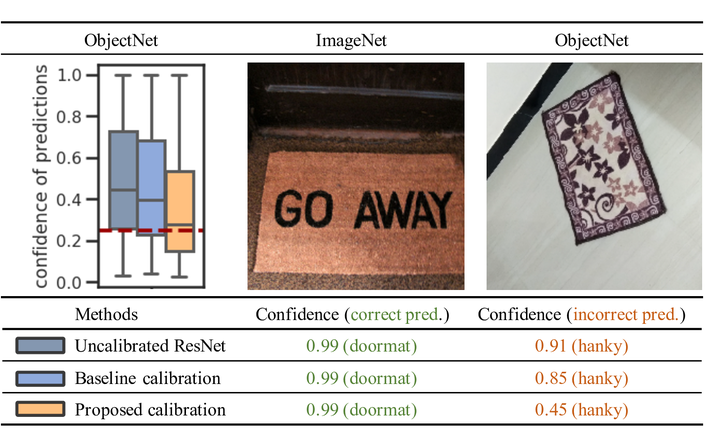

Post-hoc Uncertainty Calibration for Domain Drift Scenarios

We address the problem of uncertainty calibration. While standard deep neural networks typically yield uncalibrated predictions, calibrated confidence scores that are representative of the true likelihood of a prediction can be achieved using post-hoc calibration methods. However, to date the focus of these approaches has been on in-domain calibration. Our contribution is two-fold. First, we show that existing post-hoc calibration methods yield highly over-confident predictions under domain shift. Second, we introduce a simple strategy where perturbations are applied to samples in the validation set before performing the post-hoc calibration step. In extensive experiments, we demonstrate that this perturbation step results in substantially better calibration under domain shift on a wide range of architectures and modelling tasks.

Reference: Tomani, Gruber, Erdem, Cremers & Buettner, CVPR 2021 (oral presentation)
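
The perturbation strategy described above can be sketched with plain temperature scaling as the post-hoc calibrator. This is a toy illustration under several assumptions that do not come from the paper: the perturbation is additive Gaussian noise at a few hand-picked scales, the temperature is found by grid search over the negative log-likelihood, and the "model" is a hypothetical linear classifier.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def nll(logits, labels, temperature):
    """Mean negative log-likelihood after scaling logits by 1/temperature."""
    probs = softmax(logits / temperature)
    return float(-np.log(probs[np.arange(len(labels)), labels] + 1e-12).mean())

def fit_temperature(logits, labels, grid=None):
    """Pick the temperature with the lowest NLL from a fixed grid."""
    if grid is None:
        grid = np.linspace(0.05, 10.0, 200)
    losses = [nll(logits, labels, t) for t in grid]
    return float(grid[int(np.argmin(losses))])

def fit_temperature_on_perturbed(model, x_val, y_val, noise_scales, rng):
    """Perturb the validation inputs first, then fit the temperature.

    Gaussian noise stands in for the perturbation (an assumption).
    """
    all_logits, all_labels = [], []
    for scale in noise_scales:
        x_pert = x_val + rng.normal(0.0, scale, size=x_val.shape)
        all_logits.append(model(x_pert))
        all_labels.append(y_val)
    return fit_temperature(np.concatenate(all_logits), np.concatenate(all_labels))

# Toy setup: a linear "model" that is perfectly accurate on clean inputs.
rng = np.random.default_rng(0)
W = rng.normal(size=(5, 3))
model = lambda x: x @ W
x_val = rng.normal(size=(200, 5))
y_val = model(x_val).argmax(axis=1)

t_clean = fit_temperature(model(x_val), y_val)
t_pert = fit_temperature_on_perturbed(model, x_val, y_val, (0.5, 1.0, 2.0), rng)
```

In this toy setup the clean validation set contains no errors, so nothing stops the fitted temperature from collapsing to the lower end of the grid. The perturbed set contains misclassified samples, which penalizes over-confidence and keeps the fitted temperature from shrinking in the same way.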

Parameterized Temperature Scaling for Boosting the Expressive Power in Post-Hoc Uncertainty Calibration

We address the problem of uncertainty calibration and introduce a novel calibration method, Parameterized Temperature Scaling (PTS). Standard deep neural networks typically yield uncalibrated predictions, which can be transformed into calibrated confidence scores using post-hoc calibration methods. In this contribution, we demonstrate that the performance of accuracy-preserving state-of-the-art post-hoc calibrators is limited by their intrinsic expressive power. We generalize temperature scaling by computing prediction-specific temperatures, parameterized by a neural network. We show with extensive experiments that our novel accuracy-preserving approach consistently outperforms existing algorithms across a large number of model architectures, datasets and metrics.

Reference: Tomani, Cremers & Buettner, arXiv 2021 (in submission)
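
The idea of prediction-specific temperatures can be illustrated with a small NumPy sketch. It is not the PTS implementation: the network size, the use of sorted logits as input features, the softplus output and the random, untrained weights are all assumptions made here for illustration. The sketch only shows why dividing each logit vector by its own positive temperature leaves the predicted class, and hence accuracy, unchanged.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_temperature_net(num_classes, hidden=8, seed=0):
    """Random weights for a tiny two-layer network (hypothetical, untrained)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, size=(num_classes, hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.1, size=(hidden, 1)),
        "b2": np.zeros(1),
    }

def sample_temperatures(params, logits):
    """Map each logit vector to one strictly positive temperature."""
    # Sorting in descending order makes the input independent of class
    # order (a design assumption of this sketch).
    features = -np.sort(-logits, axis=1)
    hidden = np.maximum(0.0, features @ params["W1"] + params["b1"])
    raw = hidden @ params["W2"] + params["b2"]
    # Softplus plus a small offset keeps the temperature above zero.
    return np.logaddexp(0.0, raw) + 1e-3

def calibrate(params, logits):
    """Scale every sample by its own temperature, then apply softmax."""
    return softmax(logits / sample_temperatures(params, logits))

# Toy usage with random logits for 6 samples and 4 classes.
rng = np.random.default_rng(1)
logits = rng.normal(size=(6, 4)) * 3.0
params = init_temperature_net(num_classes=4)
temperatures = sample_temperatures(params, logits)
calibrated = calibrate(params, logits)
```

In practice the network weights would be fitted on held-out data against a calibration objective; that training step is left out here.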